
Top 7 Best Data Science Tools For Data Scientists

Over the last decade, data science has grown rapidly, and it now spans some of the most in-demand technologies, including Big Data, Artificial Intelligence, and Machine Learning. Data science applies analytics to Big Data to extract valuable insights from it. In this article, we discuss the top 7 data science tools for data scientists.

Before we proceed, let us cover a few basics to put the list in context: What is data science? What is Big Data? Who are data scientists?


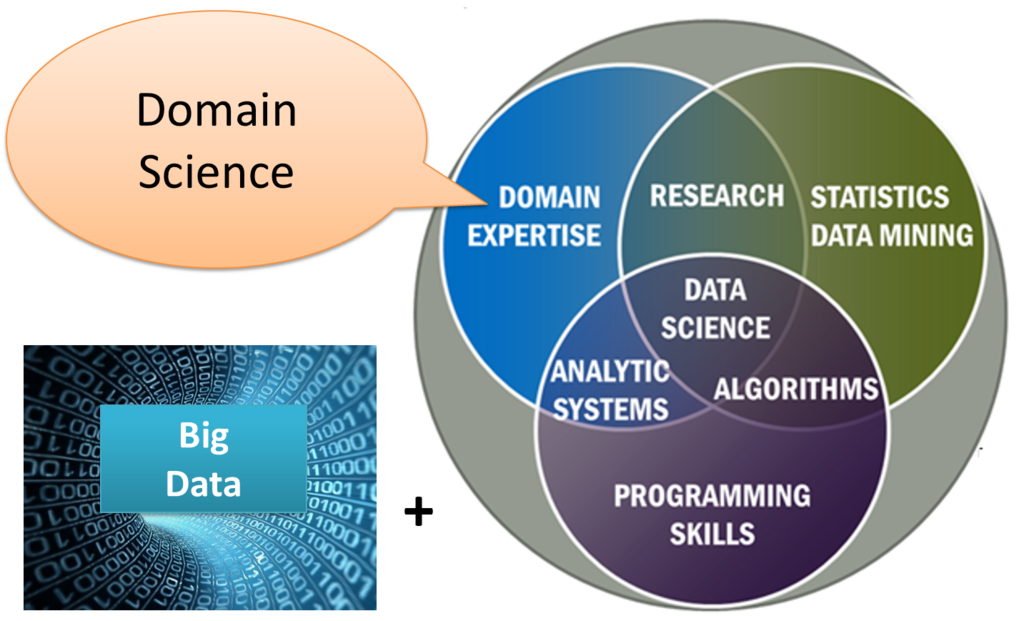

What Is Data Science?

“Data Science is a multidisciplinary study of scientific methods, procedures, algorithms, and systems for extracting knowledge and insights from structured and unstructured data to produce outcomes that drive business growth.”

In general, “data science” is defined as “a multidisciplinary study concerned with the collection, classification, cleaning, storage, retrieval, and analysis of data”.

What is Big Data?

“Big Data” refers to the large volume of structured and unstructured data produced by data-generating systems and devices. It covers data that is prepared for analysis, aggregation, and processing in order to support decision-making.

Who Are Data Scientists?

“A data scientist is a person who gathers, processes, analyzes, and communicates data in order to generate, use, and share information.”

With these foundations of data science and the data scientist's role covered, let us move on to our list of the top 7 data science tools for data scientists.

Top 7 Best Data Science Tools For Data Scientists

1. Hadoop

Apache Hadoop is one of the best-known tools for managing very large volumes of data. It lets users store many types of data, both structured and unstructured, and data scientists can use it to reliably distribute the processing of enormous data sets across clusters of machines.

You do not strictly need Hadoop to become a data scientist. However, a data scientist must first be able to get at the data before analyzing it, and Hadoop is one of the key technologies for working with huge amounts of data.

Hadoop is made up of several modules for flexible data processing: Hadoop Common (shared libraries and utilities), the Hadoop Distributed File System (HDFS) for storage, Hadoop YARN for cluster resource management and job scheduling, and Hadoop MapReduce for parallel processing.
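To make the MapReduce idea more concrete, here is a minimal pure-Python sketch of the classic word-count job. It only imitates the map, shuffle, and reduce phases sequentially on one machine; it does not use Hadoop's actual Java API or Hadoop Streaming, and all function names are hypothetical. On a real cluster, Hadoop would run many map and reduce tasks in parallel over data stored in HDFS.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Map step (hypothetical helper): emit a (word, 1) pair for every word in one record."""
    for word in line.lower().split():
        yield word, 1


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle step: group emitted values by key, as the framework does between map and reduce."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reduce step: combine all values that share a key into a single result."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Run map -> shuffle -> reduce sequentially over the input lines."""
    mapped = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    documents = ["big data needs big clusters", "data science uses data"]
    print(word_count(documents))
```

Because each map call looks at only one record and each reduce call sees only one key's values, both steps can be split across machines; that independence is what lets Hadoop scale such a job out.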

2. MongoDB

MongoDB is a document database that lets you structure data however you like. Because it is a NoSQL database, it does not follow the rigid table-based format of relational SQL databases; instead, it stores records as flexible, JSON-like documents. This reduces complexity by providing capabilities that would otherwise require adding extra layers on top of a SQL database. Its dynamic schema lets you handle a wide range of data and consolidate insights from it.

Other NoSQL databases, such as Cassandra, CouchDB, ArangoDB, and DynamoDB, can also be used to move data onto a platform that provides reliable data management. (PostgreSQL is a relational database rather than a NoSQL one, although it can also store JSON documents.)

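3. MySQL

SQL is one of the most in-demand skills in data science. It is the standard language for retrieving data from relational databases such as MySQL, Oracle Database, PostgreSQL, Microsoft SQL Server, and IBM Db2. Some NoSQL systems offer SQL-like query languages as well (Couchbase, for example, has SQL++), while others, such as MongoDB, use their own query languages.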
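Here is a minimal sketch of retrieving data with SQL from Python. It uses the standard-library `sqlite3` module with an in-memory database so it runs anywhere; against MySQL you would connect through a driver such as MySQL Connector/Python instead, and the placeholder syntax differs (`%s` rather than `?`). The `orders` table, its rows, and the helper names are made-up for illustration.

```python
import sqlite3
from typing import List, Tuple


def make_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with a small, made-up orders table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)")
    conn.executemany(
        "INSERT INTO orders (customer, total) VALUES (?, ?)",
        [("Asha", 120.0), ("Ben", 45.5), ("Chen", 300.0)],
    )
    conn.commit()
    return conn


def large_orders(conn: sqlite3.Connection, min_total: float) -> List[Tuple[str, float]]:
    """Return (customer, total) rows at or above min_total, largest first.

    The threshold is passed as a query parameter rather than pasted into the SQL
    string, which avoids SQL injection.
    """
    cursor = conn.execute(
        "SELECT customer, total FROM orders WHERE total >= ? ORDER BY total DESC",
        (min_total,),
    )
    return cursor.fetchall()


if __name__ == "__main__":
    conn = make_demo_db()
    print(large_orders(conn, 100))  # [('Chen', 300.0), ('Asha', 120.0)]
    conn.close()
```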

In one popularity ranking of database systems, MySQL and Microsoft SQL Server placed third and fourth, respectively. Even with some of the largest companies in the computer industry, such as Microsoft, Oracle, and IBM, competing in this market, several free and open-source database management systems, such as PostgreSQL and Apache Cassandra, remain highly competitive.

While everyone is busy learning R or Python for data science, we tend to forget that there is no data science without data. Knowing MySQL, or SQL in general, is almost a mandatory skill for a successful data scientist.

4. SAP HANA

SAP HANA (High-Performance Analytic Appliance) is an in-memory, column-oriented relational database management system developed and sold by SAP. Its primary job as a database server is to store and retrieve data as needed by a variety of applications.

Data can be loaded into HANA from both SAP and non-SAP sources in several ways, including ETL-based replication that uses SAP Data Services. Businesses with SAP in their ecosystem use HANA to process enormous amounts of real-time data quickly.

Because SAP is a closed-source ecosystem, HANA may not be a practical tool for independent data scientists, but it is a useful skill to have within a company that runs SAP or for an SAP-focused career.

5. Hive

Apache Hive is a data warehouse software project built on top of Apache Hadoop for data query and analysis. It provides a SQL-like interface, called HiveQL, for querying data stored in the various databases and file systems that integrate with Hadoop.

At the data management level, Hadoop, Hive, and Pig work in tandem. Hive is best known for data summarization and querying. It was created so that users who already know SQL could access and work with data in the Hadoop ecosystem without having to learn an entirely new language.
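Hive's appeal is that a data summary looks like ordinary SQL. The sketch below runs a `GROUP BY` summary query; the query text is standard SQL that would read almost the same in HiveQL, but here Python's built-in `sqlite3` stands in for Hive so the example runs without a Hadoop cluster. On Hive, a query like this would be compiled into jobs that run across the cluster. The `sales` table, its rows, and `summarize_sales` are made-up for illustration.

```python
import sqlite3
from typing import Iterable, List, Tuple

# Standard SQL aggregation; HiveQL supports the same GROUP BY / COUNT / SUM syntax.
SUMMARY_QUERY = """
    SELECT region, COUNT(*) AS order_count, SUM(amount) AS revenue
    FROM sales
    GROUP BY region
    ORDER BY region
"""


def summarize_sales(rows: Iterable[Tuple[str, float]]) -> List[Tuple[str, int, float]]:
    """Load (region, amount) rows into a throwaway table and return one summary row per region."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
        conn.executemany("INSERT INTO sales (region, amount) VALUES (?, ?)", rows)
        return conn.execute(SUMMARY_QUERY).fetchall()
    finally:
        conn.close()


if __name__ == "__main__":
    sample = [("east", 10.0), ("west", 5.0), ("east", 2.5)]
    print(summarize_sales(sample))  # [('east', 2, 12.5), ('west', 1, 5.0)]
```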

6. Apache Spark

Apache Spark is a unified analytics engine for large-scale data processing. It is versatile because applications can be written in a range of languages, including Java, Scala, Python, R, and SQL.

Data scientists use Spark for data cleaning and transformation, feature engineering, model building, scoring, and “productionizing” data science pipelines. From what I have read, Spark also aims to deliver an experience similar to Python’s pandas, which I find intriguing.

7. RapidMiner

RapidMiner is a sophisticated, end-to-end data mining tool that is open-source and fully transparent. It lets you develop predictive models quickly and includes hundreds of data preparation and machine learning techniques to support a wide variety of data mining tasks.

Beyond data preparation and model building, RapidMiner supports a variety of data management approaches and machine learning activities, such as visualization, predictive analysis, and deployment. It is written in Java.

These are our top 7 data science tools to help data scientists succeed in their professional journey.

Conclusion

Data science uses data and data sets to acquire new knowledge, explain situations, and improve decision-making. To truly become a data scientist, you must understand the semantics of data: why and how it is collected, how it is transformed into useful information, and how it is analyzed. The rising demand for Artificial Intelligence (AI) and Machine Learning (ML) solutions is likely to keep these technical specialists in high demand for years to come.